Culture

When air becomes music: An example of how biology deals with data

Published

4 years ago


This article is mainly based on Daniel J. Levitin's book « This Is Your Brain on Music », and to a lesser extent on the work of Oliver Sacks. I tried to give it a personal touch, but at the end of the day I’m just standing on the shoulders of giants, and I would be remiss if I didn’t urge you to go back to the sources, where you will find the real deal.

I always wondered, as with the chicken and the egg, whether the musical mind was a direct result of the same evolutionary process that shaped every existing physiological function, such as the perception of colors, sounds, and scents, or whether it was the product of some ingeniously discovered brain hack, perhaps something akin to drug-induced frenzies and hallucinations. It is surely a curious affair, but whatever the answer, one thing remains certain: the human brain is equipped to perceive, and eventually respond to, music differently than it does to ordinary sound.

We know that one remnant of our mammalian predecessors’ lifestyle is the reflex of responding to a specific sound, or at least to some inherent element that defines and distinguishes it. Humans (as well as a considerable number of other animal species), for example, are wired to react to particular auditory stimuli: a baby’s cry, for instance, is set to trigger a reaction from the mother. This kind of response is very interesting to monitor with functional MRI, where you can actually see defined parts of the brain activating along with the infant’s cry.

Some animal species are startled by the noises characteristic of their natural predators, so even if they are isolated shortly after birth, and thus have never heard those noises, a fight-or-flight reaction is still triggered. It is innate; it’s simply wired in. Music, on the other hand, seems to work on a deeper level: it is much more complex, and it relies on the brain’s ability to recognize intricate patterns and then relay the right commands to the right structures. Unfortunately, the exact mechanisms are not fully understood at the moment, and saying that the science of the musical mind is vast would be an understatement, so I doubt that this relatively short article can lay down more than a fraction of it.

Yet that doesn’t matter, since that tiny fraction was enough to leave me in awe, and hopefully it will do the same for you. I’m trying to share a glimpse of what makes music magic, from its fundamental building blocks to the way it is processed in the brain, and hopefully together we will answer life’s most critical questions: why do I feel blue when I listen to the Smiths? Why do I feel the urge to dance when Soft Cell’s version of « Tainted Love » is playing? And why can’t I help but sing along to « Bohemian Rhapsody »?

It is almost impossible to put a value on a given perception, since perception is in a sense the very definition of reality, and nothing can be more subjective than that. This doesn’t mean we can’t work with the notion: we might still be able to judge its usefulness in a given environment. So, for the sake of argument, let us take the average healthy human as a point of reference and define our notion of perception: the power of perception. By definition, the average healthy human has a mind that perceives just a fragment of all reality, and that fragment is sufficient to survive and thrive in (and only in) that same reality.

Decrease that power of perception and we get cases like blindness, deafness, or more fringe and exciting complications. Increase it and we could get either superhuman-like abilities or very debilitating conditions. With that said, I’m taking the liberty of establishing a further notion, let’s call it the meaningfulness of perception: the optimal perceptual power, which extends beyond that of the average healthy person yet never gets in the way of a person’s daily life.

Now we get to ask a question: what makes a perception meaningful? A perception is as meaningful as the amount of information the signal holds, the capacity of the sensor to extract that information, and how well that information is then integrated into a whole. We could compare this last part to processing in a computer. This principle is important because anything that exists can in principle be converted into « data », as long as you have the right sensor and the right way to process it; at least, that is how nature has managed to keep living things around for so long.

Then humans changed the game when they started messing around with the signals: eventually they succeeded in bypassing the source, either duplicating it or creating a new one. Here, of course, I’m talking about inventions as simple as speakers and headphones. So when we talk about music and the phenomena behind it, we are dealing with a fairly meaningful perception of what partly constitutes reality.

To begin understanding music we have to strip it down to its core element: the sound wave. Sound propagates as a wave, which, interestingly enough, is a very efficient vessel for information. To dive a little deeper, picture dropping a pebble on a smooth water surface: ripples form, circular waves that spread outward. Those waves trace the path of energy through the water, moving in an oscillating manner and pushing water particles along the way.

Now, instead of the water and the pebble, we strike a guitar string, and the same thing happens: energy starts to move around. The shape of the string confines the wave to a single dimension, along its length, instead of the two dimensions of the water’s surface, but the principle stays the same. Since air is also made of particles that can be pushed around, the string transfers some of its energy to the air, which lets the wave propagate there too, again by the same principle. A wave has several properties, the most important being speed, frequency, and intensity.

Speed depends on the medium the wave travels through; that’s why, for example, the speed of sound in air is more or less constant, and why inhaling helium (a gas about seven times less dense than air, in which sound travels roughly three times faster) makes your voice sound funny. Frequency also depends on the properties of the material: in a guitar string, it can be altered by loosening or tightening the string. When we later talk on a less physical and more perceptual level, we will refer to it as pitch. Intensity is what is seen, mathematically and intuitively, as the height (amplitude) of the wave; it depends on how hard you pluck a string, and it defines loudness.
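To make the two physical claims above concrete, here is a minimal Python sketch. It assumes an ideal string fixed at both ends (Mersenne's laws, f = √(T/μ) / 2L) and an ideal gas for the speed of sound (v = √(γRT/M)); the string length, tensions, linear density, and temperature are illustrative values, not measurements of a real guitar.

```python
import math

R = 8.314  # molar gas constant, J/(mol*K)

def string_fundamental(length_m, tension_n, mass_per_length_kg_m):
    """Fundamental frequency of an ideal string fixed at both ends (Mersenne's laws)."""
    wave_speed = math.sqrt(tension_n / mass_per_length_kg_m)  # v = sqrt(T / mu)
    return wave_speed / (2 * length_m)  # the fundamental's wavelength is twice the length

def speed_of_sound(gamma, molar_mass_kg_mol, temperature_k=293.15):
    """Speed of sound in an ideal gas: v = sqrt(gamma * R * T / M)."""
    return math.sqrt(gamma * R * temperature_k / molar_mass_kg_mol)

# Illustrative string: 0.65 m long, 1 g/m, tightened from 60 N to 240 N
loose = string_fundamental(0.65, 60.0, 0.001)
tight = string_fundamental(0.65, 240.0, 0.001)
print(f"loose: {loose:.1f} Hz, tight: {tight:.1f} Hz")  # 4x the tension gives 2x the frequency

air = speed_of_sound(1.4, 0.02897)        # air treated as a diatomic ideal gas
helium = speed_of_sound(5 / 3, 0.004003)  # helium is monatomic
print(f"air: {air:.0f} m/s, helium: {helium:.0f} m/s, ratio: {helium / air:.1f}")
```

Because frequency grows with the square root of tension, tightening a string raises its pitch, but not proportionally: quadrupling the tension only doubles the frequency.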

Other elements characterizing a sound also derive from the wave, making it even richer in « data »: contour, rhythm, tempo, timbre, spatial location, and reverberation. These are not technically intrinsic to the wave itself, but they describe its state in the outside environment and its interaction with a multitude of similar or different waves. Contour describes the overall shape of a melody, that is, whether the sound seems to go up or down; to visualize the difference, say the word « EETA » for up and « ATEE » for down.

This effect is coded as the difference between two successive pitches in the melody. Rhythm, briefly, is the relationship between the length of one note and another. You could argue that music stripped of its rhythm goes back to being just sound. Tempo is the pace of the piece or, if you prefer, how fast it is being played; it must not be confused with rhythm, since the two vary independently. These last two play a major role in the emotional impact of a piece of music.
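Contour and rhythm can both be written as simple transformations of a list of notes. This Python sketch is only an illustration under assumptions: the melody, the note lengths in beats, and the U/D/S (up/down/same) encoding are hypothetical choices, not a standard notation.

```python
def contour(pitches_hz):
    """Encode a melody's contour: U (up), D (down) or S (same) between consecutive notes."""
    steps = []
    for prev, curr in zip(pitches_hz, pitches_hz[1:]):
        if curr > prev:
            steps.append("U")
        elif curr < prev:
            steps.append("D")
        else:
            steps.append("S")
    return "".join(steps)

def rhythm_ratios(durations_beats):
    """Express each note's length relative to the length of the note before it."""
    return [curr / prev for prev, curr in zip(durations_beats, durations_beats[1:])]

melody = [261.63, 293.66, 329.63, 293.66, 261.63]  # approximately Do Re Mi Re Do
lengths = [1.0, 0.5, 0.5, 1.0, 2.0]                # hypothetical note lengths in beats
faster = [d * 0.5 for d in lengths]                # same piece at double tempo

print(contour(melody))          # UUDD
print(rhythm_ratios(lengths))   # [0.5, 1.0, 2.0, 2.0]
print(rhythm_ratios(faster))    # [0.5, 1.0, 2.0, 2.0]: tempo changed, rhythm did not
```

Playing the piece twice as fast halves every duration but leaves every ratio intact, which is one way to see why tempo and rhythm vary independently.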

Timbre is what distinguishes one instrument from another. You hear a piano and you know it’s a piano and not a guitar or a violin, even when the exact same note is being played. This type of auditory data is also what allows us to recognize familiar voices and to distinguish thunder from rain. Since two identical guitars would have the same timbre, it is no surprise that this parameter is also related to the material the sound emanates from. Reverberation, as explained by Daniel J. Levitin, « refers to the perception of how distant the source is from us in combination with how large a room or hall the music is in; often referred to as “echo” by laypeople, it is the quality that distinguishes the spaciousness of singing in a large concert hall from the sound of singing in your shower ».

This element exploits neural circuitry intended to perceive sound in the context of space, and it adds an undeniable emotional value to music. A great example is the work of Bon Iver, who mastered reverberation to create a hauntingly beautiful atmosphere in songs like « Re: Stacks » or « Flume ».

The elements I just enumerated qualify as fundamental perceptual attributes. When they are combined and processed by the brain, higher-level concepts emerge: meter, harmony, and melody.

Frankly, my explanation of these lower-level concepts was a watered-down version of the whole deal; each of them would take pages to develop fully, a liberty I don’t have here. But as a compromise, and as necessity dictates for the sake of a more intelligible read, I’m going to expand on some of them.

While frequency defines the physics of a sound, pitch refers to its perception, much as wavelength relates to color. Audible sounds range from 20 Hz to 20,000 Hz, but hearing acuity drops off at the extremes. Even though there is theoretically an infinite number of pitches, each corresponding to a different frequency (pitches exist on a continuum of audible frequencies), we only notice a change, a transition from one pitch to another, if the difference exceeds a certain threshold. That threshold is not the same for everyone, and it is linked to the density of sensor cells found in a specialized tissue of the ear.

As an estimate, a human being can’t distinguish two pitches closer than one-tenth of a semitone. If you have never studied music, the semitone may confuse you; bear with me, I’m getting to it. We are all at least somewhat familiar with the musical scale, more commonly known as Do Re Mi. A modern piano has 88 keys, 52 white and 36 black; let’s forget about the black ones for now.
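The « one-tenth of a semitone » estimate can be expressed in cents, the usual unit for small pitch differences (1 semitone = 100 cents, 1 octave = 1200 cents). This Python sketch assumes a single fixed threshold of 10 cents taken from that estimate; as noted above, real thresholds vary between listeners and across the frequency range.

```python
import math

def cents_between(f1_hz, f2_hz):
    """Size of the interval from f1 to f2 in cents (1200 cents per octave)."""
    return 1200 * math.log2(f2_hz / f1_hz)

def probably_distinguishable(f1_hz, f2_hz, threshold_cents=10.0):
    """Assumption: one fixed discrimination threshold of about 1/10 of a semitone."""
    return abs(cents_between(f1_hz, f2_hz)) >= threshold_cents

print(round(cents_between(440.0, 880.0)))      # 1200: one octave
print(probably_distinguishable(440.0, 441.0))  # False: about 4 cents apart
print(probably_distinguishable(440.0, 445.0))  # True: about 20 cents apart
```

Working in cents rather than hertz matters because the threshold is relative: a 1 Hz difference is far more noticeable at 100 Hz than at 4,000 Hz.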

If you start hitting the white keys one after the other, in order, you will notice that after 7 of them, what comes out is the same series of notes you just played, only higher. And most people know that after going from Do to Si, you go back to Do. So something about this is cyclic. But the Do of one cycle doesn’t sound the same as the one before or after it: it seems higher or lower. In music jargon, we call each cycle an octave.

Here’s the wizardry behind that. Each note is defined by a pitch frequency, meaning that if we take two pianos and want them to sound the same, we tighten their strings so that, when struck, they vibrate at the same frequencies. That’s what tuning means, by the way: setting the instrument to vibrate at specific frequencies. Take an arbitrary frequency of 25 Hz as an example: to hear the same note one octave higher, you double the frequency, and every further octave doubles it again (25 Hz × 2^N), giving 50 Hz, 100 Hz, 200 Hz… In the Western musical tradition, an octave is divided into 12 semitones, an arbitrarily chosen unit of pitch.
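Octave equivalence can be checked numerically: two frequencies share a note name when their ratio is a power of 2. This Python sketch uses the 25 Hz example from the text; the 1-cent tolerance is an arbitrary assumption meant only to absorb rounding.

```python
import math

def octave_shift(freq_hz, octaves):
    """Move a frequency by whole octaves: each octave up doubles it, each octave down halves it."""
    return freq_hz * 2 ** octaves

def same_pitch_class(f1_hz, f2_hz, tol_cents=1.0):
    """True if the two frequencies are a whole number of octaves apart (within tol_cents)."""
    octaves = math.log2(f2_hz / f1_hz)
    deviation_cents = 1200 * abs(octaves - round(octaves))
    return deviation_cents <= tol_cents

print([octave_shift(25.0, n) for n in range(4)])  # [25.0, 50.0, 100.0, 200.0]
print(same_pitch_class(25.0, 100.0))  # True: two octaves apart
print(same_pitch_class(25.0, 75.0))   # False: 75 Hz is three times 25 Hz, not an octave
```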

The pitches chosen to form the musical scale were arbitrarily picked, but not at random. If you take a note and the one just after it (the next key, black or white) on an instrument tuned to the standard scale, you’ll notice that the frequency increases each time at a constant rate of about 6% (precisely, a factor of 2^(1/12) ≈ 1.0595, so that 12 steps make exactly one octave). That makes the notes sound equally spaced to our ears even though they are not equally spaced in hertz: if Do vibrates at f, the next note is at about 1.06 × f and the one after it at about 1.06 × 1.06 × f ≈ 1.12 × f, so the second step is slightly wider in hertz than the first. This is made possible by the brain’s ability to recognize proportional change, which, if you think about it, is very impressive.
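The constant-ratio idea can be sketched in Python. This assumes twelve-tone equal temperament and the common reference pitch La (A) = 440 Hz; both are conventions chosen for the illustration. The output shows the step in hertz growing while the ratio between neighbouring notes stays fixed.

```python
SEMITONE_RATIO = 2 ** (1 / 12)  # about 1.0595, i.e. roughly a 6% step

def equal_tempered(reference_hz=440.0, steps=12):
    """Frequencies of the reference note and the `steps` semitones above it."""
    return [reference_hz * SEMITONE_RATIO ** n for n in range(steps + 1)]

freqs = equal_tempered()
gaps_hz = [high - low for low, high in zip(freqs, freqs[1:])]
ratios = [high / low for low, high in zip(freqs, freqs[1:])]

print(f"first step: {gaps_hz[0]:.2f} Hz, last step: {gaps_hz[-1]:.2f} Hz")
print(f"every ratio is about {ratios[0]:.4f}")
print(f"12 steps up: {freqs[-1]:.1f} Hz (one octave above 440 Hz)")
```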

This sensitivity to the spacing between notes is especially useful when building melody. Melody, in a nutshell, is the shape of the music: it doesn’t care about individual pitches but about the relationships between them, the distances separating them. To help you see it, imagine you’re holding a guitar. Your fingers are on some combination of frets and you strum the strings in some order; now slide your hand along the neck without changing that combination, transferring it as it is. If you followed my instructions and strum the strings in the same order, you will hear something very similar to the first time; the element responsible for that similarity is the melody. When you hum a song, you hum its melody.
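The guitar thought experiment amounts to adding the same offset to every note, which leaves the intervals, and therefore the melody, unchanged. This Python sketch assumes notes are numbered in semitones using MIDI-style note numbers (60 = middle C); the tune itself is made up.

```python
def intervals(semitone_pitches):
    """Describe a melody as the intervals (in semitones) between consecutive notes."""
    return [b - a for a, b in zip(semitone_pitches, semitone_pitches[1:])]

def transpose(semitone_pitches, shift):
    """Shift every note by the same number of semitones, like sliding a hand along the frets."""
    return [p + shift for p in semitone_pitches]

tune = [60, 62, 64, 60, 67]  # hypothetical tune, MIDI-style numbering
moved = transpose(tune, 5)   # same shape, five semitones higher

print(moved)            # [65, 67, 69, 65, 72]: every pitch changed
print(intervals(tune))  # [2, 2, -4, 7]
print(intervals(moved)) # [2, 2, -4, 7]: the melody is the same
```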

At this point you might be confused: if the pitch frequency sets the note, and if, generally speaking, two different instruments can reach the same frequency, why don’t they all sound the same? How the heck do we explain timbre?

In the 1950s, a composer named Pierre Schaeffer ran an experiment. He first recorded the same musical piece on different instruments, then edited out the first instant of every note. That instant corresponds to the action responsible for the initial transfer of energy we talked about earlier: dropping the pebble, strumming the string, hitting a bell with a hammer, or whatever we use to play an instrument. We call it the attack. What Schaeffer found after piecing everything back together is that without the attack, people could not recognize the instrument being played. Cool, right?

Explaining these strange results requires revisiting how pitch manifests itself in practice rather than in theory. When I said a string vibrates at a specific pitch, I undersold it: in reality it vibrates at several frequencies all at once, a physical property of vibrating objects in general. And if you have ever heard the word harmonics and never bothered checking its meaning, well, it is roughly that: a sound made of layers of pitches. In a harmonic sound, the lowest pitch is called the fundamental frequency, and the others are collectively called overtones.

In such sounds, the overtones are integer multiples of the fundamental frequency: with a fundamental of 50 Hz, the overtones would be 100 Hz, 150 Hz, and so on (in real instruments they are sometimes only approximately those multiples). When dealing with such a multi-layered sound, the brain, which first detects each pitch separately, synchronizes the neural activity associated with each one to create a unified perception of the sound.

We must never forget that we are still talking about waves, and waves have an intensity that determines loudness. Since the loudness of each overtone can vary independently, we end up with an infinite number of combinations. We call such a combination the overtone profile, and we can look at it as the fingerprint of an instrument’s characteristic sound. Nowadays we can isolate these overtones and create, basically from scratch, new sounds never heard before and even impossible to find in nature (and this is just one way to do it).
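Building a sound from an overtone profile is known as additive synthesis. This minimal Python sketch sums sine waves at integer multiples of a fundamental; the two amplitude profiles (« bright » and « mellow ») and the sample rate are invented for illustration, and the sketch ignores the inharmonic and time-varying components of real instruments.

```python
import math

def harmonic_series(fundamental_hz, count):
    """The fundamental and its overtones at integer multiples: f, 2f, 3f, ..."""
    return [k * fundamental_hz for k in range(1, count + 1)]

def synthesize(fundamental_hz, profile, sample_rate=8000, duration_s=0.02):
    """Additive synthesis: sum of sines at k * f0, where profile[k - 1] is harmonic k's amplitude."""
    samples = []
    for i in range(round(sample_rate * duration_s)):
        t = i / sample_rate
        samples.append(sum(
            amp * math.sin(2 * math.pi * k * fundamental_hz * t)
            for k, amp in enumerate(profile, start=1)
        ))
    return samples

print(harmonic_series(50, 4))  # [50, 100, 150, 200]

# Two hypothetical overtone profiles on the same 100 Hz fundamental
bright = synthesize(100, [1.0, 0.8, 0.6, 0.4])  # strong overtones
mellow = synthesize(100, [1.0, 0.1, 0.02])      # mostly the fundamental
```

Both signals repeat every 1/100 of a second (80 samples at 8,000 samples per second), so they share the same pitch, yet their waveforms differ sample by sample; that difference is what we would hear as timbre.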

In addition to the overtone profile, two other elements shape the singular character of a sound: attack and flux. The attack explains the Schaeffer experiment: when we introduce energy into a material, it propagates as a bunch of waves whose frequencies don’t follow the integer relationship that overtones do; this lasts only a short time before settling into a pattern associated with an overtone profile. This means that striking a bell, strumming a string, or blowing into a whistle shapes, in that first instant, the way the instrument sounds.
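The role of the attack can be illustrated with an amplitude envelope. In this Python sketch, every number is a hypothetical choice: a linear rise during the attack followed by an exponential decay, plus an optional burst at a frequency that is not an integer multiple of the fundamental, standing in for the unstable start described above. The `remove_attack` helper mimics Schaeffer's splice by dropping the first instant of a note.

```python
import math

def envelope(t, attack_s, decay_s):
    """Hypothetical envelope: linear rise during the attack, exponential decay afterwards."""
    if t < attack_s:
        return t / attack_s
    return math.exp(-(t - attack_s) / decay_s)

def render_note(f0, attack_s, decay_s, sample_rate=8000, duration_s=0.5, transient_hz=None):
    """A tone at f0 shaped by the envelope, plus an optional inharmonic burst during the attack."""
    samples = []
    for i in range(round(sample_rate * duration_s)):
        t = i / sample_rate
        value = envelope(t, attack_s, decay_s) * math.sin(2 * math.pi * f0 * t)
        if transient_hz is not None and t < attack_s:
            # Assumed stand-in for the initial transient: a fading, non-harmonic component
            value += 0.5 * (1 - t / attack_s) * math.sin(2 * math.pi * transient_hz * t)
        samples.append(value)
    return samples

def remove_attack(samples, sample_rate, cut_s):
    """Drop the first cut_s seconds of a note, as in Schaeffer's edited recordings."""
    return samples[round(sample_rate * cut_s):]

plucked = render_note(220, attack_s=0.005, decay_s=0.3, transient_hz=1234)  # sharp attack
bowed = render_note(220, attack_s=0.15, decay_s=2.0)                        # slow swell
plucked_without_attack = remove_attack(plucked, 8000, 0.005)
```

Both notes share the same pitch; what separates them here is mostly how they begin, which is exactly the part the splicing removes.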

Flux, on the other hand, describes the way a sound changes over time after it has started. If you’re interested in the impact of timbre in music, I can’t think of a better example than Ravel’s « Boléro ». Ravel had brain damage, and by the time he wrote it, he was pitch-blind. In this piece, you’ll notice that virtually every element of the music keeps repeating except the timbre. You would think repetition would make it redundant and boring, but each time a different instrument takes up the same melody, it just keeps on coming, and it is simply brilliant.

Okay, all that sounds nice, but as long as it is airborne and unprocessed, music is yet to be born. Faithful as we are to the Socratic method, we ought to ask another question: how does vibrating air become music? The phenomenon starts when the wave finds its way into the ear and, through the eardrum and the middle ear, reaches the cochlea. This is the specialized tissue I mentioned earlier; it’s essentially a membrane covered with auditory sensor cells. You can think of them as tiny joysticks: when the vibrations reach them, they wiggle, and when they do, they send signals to the brain.

The mechanisms underlying how we get from mechanical energy to an electrical nerve signal are mostly biochemical, and that, not to say boring, is certainly an acquired taste, so let’s move on to something sexier: neurons. We can find neurons or neuron-like cells in numerous structures throughout the body, although nothing compares with the insane concentration we find in the brain. These cells are interconnected: there are nearly a hundred billion neurons in a human brain, and they form networks so complex that they allow for a computational system potent enough to support the emergence of thoughts, perceptions, and consciousness. Since music falls within the realm of perception, we will focus on how that translates neurologically.

Perception is said to be an inferential process, and unless you’re a nerd, I doubt that makes sense to you. Let me put it differently: perception, as achieved by the brain, could be summed up as experiencing what is there and what could be there. The second part stems from an evolutionary trait we inherited from our ancestors’ struggle with things that could kill them. When you look into the distance and detect something strange, maybe a pixel that doesn’t blend in with the rest, your brain will show you a crocodile, urging you to run away instead of standing there trying to figure out whether it’s a darker bush or a crocodile coming to get you.

Another role of this inferential way of doing things is dealing with data that gets lost in transit: when information is missing, the brain calculates what could have been there and fills it in. To produce an end result that unifies the real and the probable, two different approaches to processing come into play. The first, dealing with what is there, is called bottom-up processing, and it relies on two steps. The first is feature extraction, which consists of decomposing the signal and extracting the lower-level perceptual elements, for instance the building blocks of music. The second is feature integration: since we now have distinct elements, the brain deals with each one separately but all at the same time, managing several tasks at once, and then brings them together.
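As a loose software analogy for feature extraction (not a model of the ear or the brain), a Fourier transform decomposes a sound into the frequencies it contains. This naive Python DFT is written for clarity rather than speed; the 100 Hz and 200 Hz test tones and the 800 Hz sample rate are arbitrary choices made for the illustration.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Naive discrete Fourier transform (O(n^2)): amplitude in each frequency bin below Nyquist."""
    n = len(samples)
    magnitudes = []
    for k in range(n // 2):
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        magnitudes.append(abs(acc) / n)
    return magnitudes

def strongest_frequencies(samples, sample_rate, count):
    """Return the `count` frequencies (in Hz) with the largest magnitudes, in ascending order."""
    magnitudes = dft_magnitudes(samples)
    bin_hz = sample_rate / len(samples)
    top_bins = sorted(range(len(magnitudes)), key=lambda k: magnitudes[k], reverse=True)[:count]
    return sorted(k * bin_hz for k in top_bins)

sample_rate = 800
# Half a second of a 100 Hz tone mixed with a quieter 200 Hz overtone
mixture = [
    math.sin(2 * math.pi * 100 * i / sample_rate) + 0.5 * math.sin(2 * math.pi * 200 * i / sample_rate)
    for i in range(sample_rate // 2)
]
print(strongest_frequencies(mixture, sample_rate, 2))  # [100.0, 200.0]
```

The input is one mixed waveform, yet the output lists its separate components, which is the spirit of decomposing a signal before handling each element on its own.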

This is possible because the brain uses parallel neural circuits. It is also why people with brain damage or a congenital neurological dysfunction might fail to perceive one feature of music while having no problem with the others. This approach is considered low-level processing, and is thus handled by brain structures that evolved relatively early, compared with the more sophisticated structures that manage higher-level processing. Higher-level processing retrieves data from the lower level and crafts a further understanding of what you’re dealing with; that is how we can look at a succession of weird-looking shapes and recognize words.

The second approach, the one that shows us what could be there, is called top-down processing, and it takes place in the frontal lobe. It is constantly updated with information coming from the lower-level processes and from other parts of the brain, so that in the end it predicts what is most likely to happen. In the case of music, that information would be what you have already heard in the same song, what you remember of similar songs or, failing that, songs of the same genre and style; even information less related to music and more to the environment around you is taken into account.

The reason we are susceptible to auditory and visual illusions lies in the dominance of top-down processing over bottom-up processing: in practice, the predictions can override the perceived reality. What comes out of both is integrated into higher-level processes, which in the end give us a constructed perception, in our case music. Exploiting the balance between the predictable and the unpredictable is very important when trying to write thrilling music. The unexpected is associated with novelty, which turns into excitement, but only in reasonable doses: abuse it and you create something the mind can’t follow, and it becomes irritating.
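To give prediction and surprise a concrete shape, here is a deliberately toy Python sketch: a first-order Markov model that counts which note tends to follow which, predicts the most likely next note, and scores how surprising a note is in bits. This is an illustration borrowed from statistical models of melody, not a description of what the frontal lobe actually computes; the melodies, the seven-name vocabulary, and the add-one smoothing are all hypothetical choices.

```python
import math
from collections import Counter, defaultdict

def train_transitions(melodies):
    """Count which note followed which in previously heard melodies (a toy 'musical memory')."""
    counts = defaultdict(Counter)
    for melody in melodies:
        for prev, nxt in zip(melody, melody[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, current):
    """Most frequently observed continuation of the current note, or None if never seen."""
    if not counts[current]:
        return None
    return counts[current].most_common(1)[0][0]

def surprise_bits(counts, current, nxt, vocabulary_size=7):
    """Surprisal -log2 p(next | current); add-one smoothing keeps unseen notes possible."""
    total = sum(counts[current].values())
    p = (counts[current][nxt] + 1) / (total + vocabulary_size)  # 7 names: Do Re Mi Fa Sol La Si
    return -math.log2(p)

heard = [["Do", "Re", "Mi", "Do"], ["Do", "Re", "Mi", "Fa"], ["Do", "Re", "Do"]]
counts = train_transitions(heard)

print(predict_next(counts, "Do"))  # Re
print(f"Do -> Re: {surprise_bits(counts, 'Do', 'Re'):.2f} bits (expected)")
print(f"Do -> Sol: {surprise_bits(counts, 'Do', 'Sol'):.2f} bits (unexpected)")
```

In this toy model, a piece made only of the most likely continuations has low surprise throughout, while one made only of unseen transitions has high surprise everywhere; the balance discussed above sits somewhere in between.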

For the frontal lobe to make a prediction, it partly relies on music-related data retrieved from somewhere, which implies a storage system for musical information. This brings us to the wonders of neuroplasticity. To state its fundamental thesis briefly, neuroplasticity refers to the fact that when neurons activate, they change, both in their shape and in the connections they establish within a network. This means that these networks keep transforming again and again. Memory is said to be a function of those connections. This may not sound impressive put like that, but remember that a human brain holds nearly a hundred billion neurons, so the number of possible combinations is difficult to grasp.

Since we are talking about the storage of music, I might as well share a phenomenon so cool it’s almost unbelievable: the link between music and epilepsy (in musicogenic epilepsy, for instance, seizures are triggered by hearing music). Epilepsy is essentially neurons starting to fire pathologically; this activates neural circuits and can cause behavioral symptoms such as seizures. In some rare cases, the faulty wiring in a patient’s brain affects the circuits where music is stored, causing the patient to hear music in their head, as clearly as the first time they experienced it.

To end this article, I must express how grateful I am that music exists. It is one of the greatest gifts life has bestowed upon us, and unless new evolutionary data emerge, it is a rare sign of nature’s unconditional love for humanity. At the risk of stealing a lyric from an old Irish song, « for sinking your sorrow and raising your joys » nothing compares to it. So as long as your brain works and your spirit is alive, accept music not as simple entertainment but as a way of interfacing with a reality we still can’t fully comprehend.


Culture

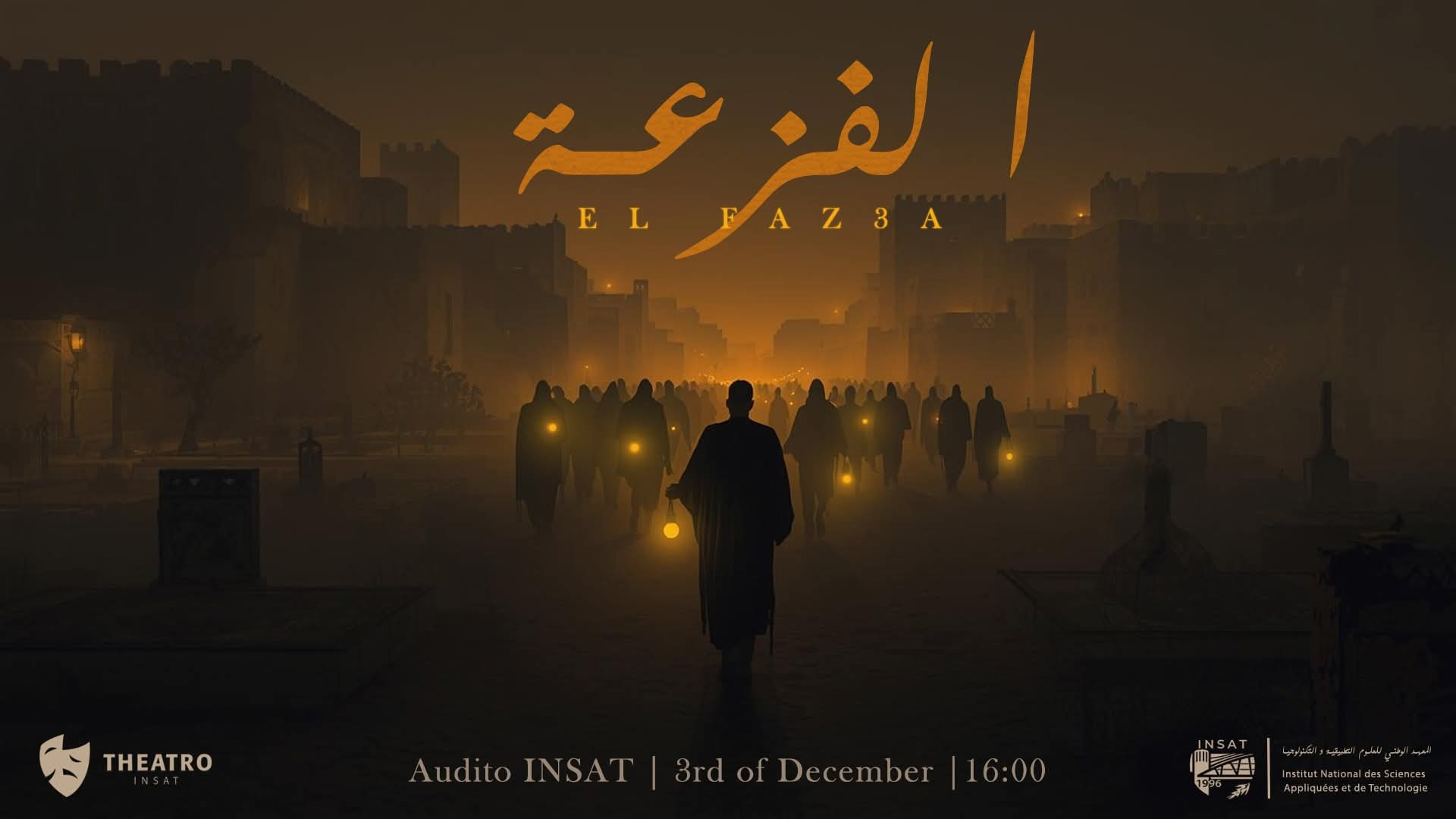

El Fazaa: A Theatrical Cry That Reveals What Silence Fears

Published

2 months ago, on 7 December 2025

I enter the room with a fire of curiosity blazing inside me, burning my ribs. A heavy smoke brushes my face: is it the smoke of stifled mystery, of inevitable loss, or of shunned annihilation? Is it the essence of suppressed anger, of age-old sorrow, or of longed-for calm?

I sit wide-eyed in a seat whose comfort I might have come to relish, were it not for the bodies circling around, bodies that seemed still despite their hesitant steps, or perhaps still in a reality other than the one we live in. They looked like ghosts clad in black; perhaps a symbol of fear, or rather a symbol of mourning… or perhaps the shadow of authority, circling relentlessly on the steps of the dark hall, carrying a white light that for a moment I took for truth in its spotless robe.

The « ghosts » uttered a single word: « Ya Nabil ». At this point I could almost swear they were searching for someone lost. The bodies kept circling, scrutinizing faces. Their steps played upon the strings of the oud, drawing out music that suggested tension as much as an urgent, desperate search. The air was charged with a wave of confusion, and I found myself listening only to the echo of their cries. I almost leapt from my seat to cry out with them: « Nabil, Nabil, come out, so that you may bring rest to these frightened, tormented souls ».

The lights began to gather at the heart of the stage, as if to proclaim that they were bound by one concern and one shared goal, on three levels, and suddenly they became a tiered slope leading us down toward the truth. « What’s wrong with you, have you forgotten her? » The chorus launches into rebuke and reproach, lifting the mystery from a village that is a forgotten, abandoned paradise, and introducing us to the traits of its simple inhabitants to the tune of « l’amour est un oiseau rebelle ».

With a dance number, the tension scattered and gave way to a joy played out in shades of yellow light. Here we set off to uncover what lies within these characters, revealed through their costumes, movements, and varied, engaging temperaments.

Nabil comes forward in this play as a name chosen with evident care: in him the values of nobility and generosity take shape, and sincere love and loyalty take root. He is the doctor who strove to be the picture of perfection: patient, humane, compassionate, a voice for the common good and the general welfare. Yet this very nobility was his weakness, for at several moments he gave in to the whispers of feeling and the lapses of emotion, especially toward the family he loved dearly, and following his feelings led to his tragic end.

This was clear from the start of the play, when he chose to support his brother Ali while overlooking the danger posed by Ali’s resort, and he paid dearly for that neglect. What fixed his image most firmly in the mind was his white coat, which he never took off even with his family, as if it were a permanent symbol of loyalty and sincerity and a reminder that everyone’s welfare was his responsibility.

At the opposite extreme stands Ali, the doctor’s brother: a marginalized, volatile man who embodies a host of social problems and psychological breakdowns. Despite his desperate attempts to prove himself, everyone’s contempt and neglect planted in him a bitter sense of being belittled and despised. As soon as he achieved a modest success, it became the flame that fed a blind desire for revenge; the resort became his means of winning people’s approval and attention, not for any real benefit, but to make up for years of rejection, oblivion, and defeat. He was fully aware of the harm he was causing to innocent lives, but he soothed his aching conscience with flimsy justifications such as « it gives work to lots of people ». At that moment, Ali becomes a symbolic embodiment of the companies that monopolize Tunisian markets and intimidate the state simply because they employ thousands. With the power he acquired, he ruled without fairness and commanded without justice. Wealth and status blinded him to the value of people, the value of his family, and the truth about himself; his cowardice and fear were hidden behind a veil of cunning and deceit. Through flattery and pretense, he exploited the feelings and the nobility of others. His relationship with Nabil rested on aversion and a deadly jealousy rooted in his memories, which the stage lighting brought out by splitting them into two distant circles of light, embodying at its core the story of Cain and Abel: a brother who betrays the brother who reached out to help him, and is forever struck by the curse of remorse and collapse… a sign of how fragile material distractions are against the strength of human bonds.

This epic conflict could have been erased by the intervention of a single person, the magic antidote able to build an everlasting bridge between Nabil and Ali: Lella Beya. Instead of being the coolness and peace that would put out her elder son’s raging fire, she made matters worse and, without meaning to, widened the gap between her two sons. Despite the hardships she had been through, life’s lessons were not enough to change her: she, who had lived on the bare minimum with her first husband, would not accept Sawsen as her son’s wife, rejecting her harshly and contemptuously and ignoring her feelings.

Lella Beya is like a dark cloud that pours down beneficial rain but blocks out the sun: she cares for her children, but her constant silence and her lack of real communication with them created an atmosphere of resentment and hatred. This is simply the result of the care and emotional support she failed to provide. As a mother, she could have taken control of the situation from the start, but the guilt that kept her awake at night over her elder son was the only thing standing in the way.

From the heart of the darkness emerges the light of hope; from the core of a world ruled by evil, goodness hides in the figure of Sawsen. She is a character strong in her principles, her values too great and noble to be crushed by greed despite the temptations. This pure angel never abandoned her morals for a moment and held fast to her family, yet the character was also used to poke fun at two age-old traits humorously attributed to women: gossip and excessive curiosity.

Around these two brothers, the other characters move in interlocking circles. Nabila, Nabil’s wife, is the other face of Najet: both are two sides of a single coin called materialism. Nabila, with a hard, dead heart and a dark soul that has never known love, admitted that she never loved Nabil and that she agreed to marry him only because he was an educated doctor with status. This stance sums her up completely: contemptuous, selfish, exploitative, seeing people not for who they are but for how they appear. The irony is that she is a teacher raising generations, as if her character carried a deep critique of a reality in which the educator becomes arrogant, measuring her worth by the money she amasses rather than by the values she instills.

Beside her, Najet is drawn as a materialistic woman who sees her worth only in her adornment and her beauty: a glaring picture of a feminine instinct living in constant competition with other women. Throughout the performance she tries to keep up with Nabila, having made her happiness hostage to surpassing a model she herself set as the standard of success. Her obsession with beauty made her forget morality, so she accepted the resort despite knowing the danger, like someone who follows the glitter and forgets the dark truth behind it.

Behind Najet stands Stoura, or, to put it more accurately, the first victim of Najet’s blind selfishness. He is, in short, the traditional image of the man who strives hard to please a wife who can never be pleased. His relationship with his wife is a miniature of a Tunisian family that, instead of creating a warm family atmosphere, creates an environment of discord and distance, which is fully reflected in the traits passed on to the children. Mounir, lacking guidance and care, has become a living example of mischief and disorder.

This lukewarm marriage rests on a duality: the constant, insistent demand, and its fulfillment, however illogical or impossible.

What also caught my attention is that this role, despite its comic purpose, skillfully sketched the features of the stereotypical Tunisian man. Stoura, who feels deep down that he is unable to satisfy his wife, turns without hesitation to hiding this inability by flirting with other women, as if searching for his worth somewhere other than where it lies. For his true worth surely comes from within, not from other people’s approval.

The inadequacy Stoura feels turns, involuntarily, into constant abuse, shown especially in his relationship with Khnifes, a young man who, for all his naivety, has ambition. Despite Khnifes’ desperate attempts to make a difference, everyone’s contempt for him breeds in him a sense of self-belittlement and self-contempt, leaving him unable to realize his existence and his identity. His presence was amusing, just like that of Rejeb, who hides a broken side. With his laughter he manages to forge a mask of strength and responsibility, crafted devotedly with the help of his comic streak, which has become the clearest proof of the loneliness that weakens him and the fear he hides.

Rejeb is another embodiment of society’s materialism; how could he not be, when he looks after his car as much as he looks after his wife, clear proof of the value of « the mechanics », in his own words, and the top priority he gives it. Rejeb fades out to leave the spotlight to Zarbout. This role gripped me: Zarbout was comedy of the highest order, yet at the same time he documented perfectly three traits of our youth: ignorance, laziness, and delinquency.

Zarbout, who does not know Morocco and, not content with that ignorance, identifies it as « the land of the Cheikha », perfectly embodies the aimlessness and loss suffered by Tunisian youth. Although he pretends to trade and work, he turns down Stoura’s tempting offer and settles for his uncertain income out of stinginess and stubbornness. His clothes match his character: he wears a nightgown in which he hides the meaningless trinkets he sells.

The youth presence was also clear in the appearance of the gravedigger, who reflects a literal practice of exploitation and inhumanity. Human beings lose their value even as lifeless corpses: bodies stripped of their belongings before burial, in graves reserved for the highest bidder. In other words: if you are not at the top of the list of wealthy donors, there is no place for you even among the dead. His name speaks of his baseness and greed; he strips death of its sanctity and its customs, and even goes further in violating its limits and its laws. So, without a doubt, the resort was an important investment for him in the market of the dead, increasing the demand for cemeteries and burial plots.

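Faced with the looming danger that threatened their peace, the characters rose up and united to get rid of the resort. But a single word from the omda (the local chief) was enough to break their resolve. This scene brings out Najib and Samah, two journalists jostling to steal the spotlight.

Samah came across as a mirror faithfully reflecting irresponsibility and a lack of professionalism: for her, journalism is a livelihood and an outlet for self-expression and amusement. With complete spontaneity she broadcasts meaningless topics to her audience, scattered chatter that distracts without serving any purpose.

As for Najib, journalism in his hands loses its credibility and its nobility, and its highest goal and first duty become silencing the voices of freedom and wiping out opposition and dissent. He may appear professional, but that professionalism is false and without foundation. Transparency and honesty are the backbone of a journalist and the soul of his relationship with the people; without them he becomes a flatterer and a sycophant, a hyena waiting for the lions’ leftovers in order to survive. Najib is therefore a frank and direct critique of the reality we live in: no press represents us and no media treats us fairly. What also struck me were the techniques Najib uses to hide the truth: choosing a particular speaker to represent the protest, and distracting the audience by moving from the real problems to trivial topics that have nothing to do with the public interest. This is nothing but manipulation of the people, a provocative distraction and a sedative for their reactions and their thoughts.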

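The last characters to appear are the most harrowing: the quartet of oppression, a circle that has imprisoned within it tormented, shattered souls whose affliction has no cure; and how could the wounds of oppression ever heal?

The raging, whirling storm starts with Dalila, a woman who accepted being called mad, though she is not, and who made madness a way of carving out a world where dreams are like illusions and sorrows like ghosts. She is a mother devastated by the loss of her two sons, denied even the chance to mourn and weep for them. Under the shock she could not accept reality and fled into her own world, where she is reunited with her sons behind the veil of madness she hides behind. She seemed the sanest of all, for the truth was spoken through her: « Money is the dirt of this world, Omda ». She kept repeating it as if warning Ali against falling into the abyss of greed.

The storm keeps turning, and a shackled, wronged young man emerges. The judiciary is the pillar of the state; if its foundation is shaken, justice and redress for the wronged collapse. Without honesty in judgment and help for the wronged, the innocent are oppressed, the oppressor prevails, and the light fades, leaving its place to an eternal, pitch-black darkness.

This young man was unjustly imprisoned despite his glaring innocence. A judge unable to speak the truth utters a dark lie, having lost his integrity and his dignity. A judge under threat and intimidation sentences him to a crushing life sentence. Is this not our reality?

El Fazaa refuses to stop, and casts forth a mother deprived of her family: the victim of a poisoning that took the lives of her unborn child and her husband. The human soul was degraded until it was wasted for nothing. A person may give up all the treasures and riches of the world and cling to a mere straw of salvation, the smallest of things, for the sake of happiness; and if even that is taken away, the world becomes dark and crushing. With pain, anguish, and hot, burning tears, the mother mourns her loss and points the finger of blame and accusation at the authorities who allowed sewage water to seep, indirectly, into our food systems.

Then, to the unsettling notes of the oud, the whirlpool turns and brings forth a young man with a disfigured face, who painfully mourns the workers whose health is taken from them for lack of protection, whose rights are trampled, and who are told to keep quiet. Is this not murder itself? Indeed, it is the murder of the soul, leaving the body adrift and lost until we call it mad. It is a circle in which bodies are imprisoned, waiting for someone to free them. Will anyone answer?

In its circular movement, this quartet seemed to me like a raging hurricane; or rather, a still surface concealing an angry whirlwind that waits for the moment to strike those around it, sparing no one.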

The play was a mixture of mocking, satirical comedy and painful, crushing tragedy. The lighting was powerful: shades of yellow evoked joy, and in some scenes pain; red evoked guilt and remorse; and shades of blue added a certain coldness and sadness. Alongside the lighting, the music helped steer the critique: the patriotic songs expressed how much pain we carry deep inside over the state we have come to, and the singing and dancing brought Western and Eastern styles together, making the work accessible to a wide range of audiences.

The costumes reflected each character’s temperament, allowing a deeper understanding of the plot. As for the staging, the actors’ presence was strong and their placement coherent and well balanced, perhaps with an indirect effect on the audience: the three levels, the chorus, and the constant face-off all suggested the clash between Ali and Nabil.

My feelings flowed back and forth between the lightness of comedy and the burn of tragedy. Watching the pain of reality laid out before you is both comforting and unsettling: you are facing the ugly image of a society you know and love. Shivers ran through me at various moments, and my emotions blended together. But what I was certain of, deep inside, was pride in belonging to the National Institute of Applied Science and Technology, where seriousness meets humor and creativity meets impartiality. You cannot help but watch the show with satisfaction and eagerness.

From the clear harmony between actors and technicians, you can be sure that a work of this quality can only be the fruit of a solid friendship uniting the members of « Teatro ». I can only ask, with enthusiasm and curiosity: what are you preparing for the next show?
